~www_lesswrong_com | Bookmarks (705)

-

Rant: the extreme wastefulness of high rent prices — LessWrong

Published on May 25, 2025 5:04 PM GMT
09:46: Everyone wants to be close to everyone else...

-

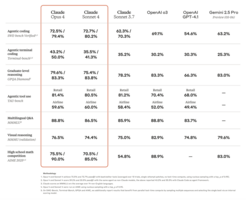

Claude 4 You: Safety and Alignment — LessWrong

Published on May 25, 2025 2:00 PM GMT
Unlike everyone else, Anthropic actually Does (Some of) the...

-

Alignment Proposal: Adversarially Robust Augmentation and Distillation — LessWrong

Published on May 25, 2025 12:58 PM GMT
Epistemic Status: Over years of reading alignment plans and...

-

Meditations on Doge — LessWrong

Published on May 25, 2025 12:00 PM GMT
Lessons from shutting down institutions in Eastern Europe. This is...

-

Lie Detectors. Technical solutions to the cooperation problem. — LessWrong

Published on May 24, 2025 8:05 PM GMT
The purpose of this post is to argue for...

-

Case Studies in Simulators and Agents — LessWrong

Published on May 25, 2025 5:40 AM GMT
Simulators was posted two and a half years ago...

-

On safety of being a moral patient of ASI — LessWrong

Published on May 24, 2025 9:24 PM GMT
I have noticed that there are talks around about...

-

We Need a Baseline for LLM-Aided Experiments — LessWrong

Published on May 24, 2025 8:52 PM GMT
There has recently been a back-and-forth over Claude 4...

-

Default history is dead wrong — LessWrong

Published on May 23, 2025 4:31 PM GMT
There is a default historic grand narrative that goes...

-

Notes on Claude 4 System Card — LessWrong

Published on May 23, 2025 3:23 PM GMT
Anthropic released Claude 4. I've read the accompanying system...

-

What is emptiness? — LessWrong

Published on May 23, 2025 12:06 PM GMT
The value of philosophy is that no one needs...

-

Idiohobbies — LessWrong

Published on May 23, 2025 6:38 AM GMT
When you get to know someone, you might ask...

-

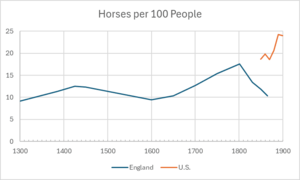

Learning (more) from horse employment history — LessWrong

Published on May 23, 2025 2:11 AM GMT
The economist Wassily Leontief, writing in 1966, used the...

-

Qualitative Fit Testing — LessWrong

Published on May 23, 2025 2:50 AM GMT
As I wrote about last week, it's worth...

-

Anthropic is Quietly Backpedalling on its Safety Commitments — LessWrong

Published on May 23, 2025 2:26 AM GMT

-

Schizobench: Documenting Magical-Thinking Behavior in Claude 4 Opus — LessWrong

Published on May 23, 2025 1:31 AM GMT
With today's release of the new Claude models, we've...

-

Post-Manifest coworking at Mox — LessWrong

Published on May 23, 2025 12:20 AM GMT
Mox (https://moxsf.com) is fully open to the public in...

-

Claude 4, Opportunistic Blackmail, and "Pleas" — LessWrong

Published on May 22, 2025 7:59 PM GMT
In the recently published Claude 4 model card: Notably, Claude...

-

Reward button alignment — LessWrong

Published on May 22, 2025 5:36 PM GMT
In the context of model-based RL agents in general,...

-

We're Not Advertising Enough (Post 3 of 6 on AI Governance) — LessWrong

Published on May 22, 2025 5:05 PM GMT
In my previous post in this series, I explained...

-

Claude 4 — LessWrong

Published on May 22, 2025 5:00 PM GMT
Claude Sonnet 4 and Claude Opus 4 are out....

-

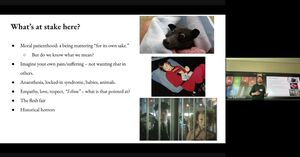

Video and transcript of talk on AI welfare — LessWrong

Published on May 22, 2025 4:15 PM GMT
This is the video and transcript of a talk...

-

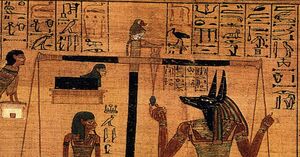

What we can learn from afterlife myths — LessWrong

Published on May 22, 2025 3:49 PM GMT
Overview: The "Modal Rationalist Anti-Death Stance" goes something like this: Since...

-

Policy recommendations regarding reproductive technology — LessWrong

Published on May 22, 2025 2:49 PM GMT
PDF version. berkeleygenomics.org. X.com. Bluesky. Introduction: Here we list...