~www_lesswrong_com | Bookmarks (715)

-

Linkpost: Predicting Empirical AI Research Outcomes with Language Models — LessWrong

Published on June 4, 2025 6:14 PM GMT

Abstract (emphasis mine): Many promising-looking ideas in AI research fail...

-

Quickly Assessing Reward Hacking-like Behavior in LLMs and its Sensitivity to Prompt Variations — LessWrong

Published on June 4, 2025 7:22 AM GMT

We present a simple eval set of 4 scenarios...

-

Philosophical jailbreaks: There is no difference if humanity lives or dies — LessWrong

Published on June 4, 2025 12:03 PM GMT

Epistemic Status: Exploratory

It was the end of August, 1991;...

-

Notes from a mini-replication of the alignment faking paper — LessWrong

Published on June 4, 2025 11:01 AM GMT

Key takeaways

This post contains my notes from a...

-

ARENA 6.0 - Call for Applicants — LessWrong

Published on June 4, 2025 10:19 AM GMT

TL;DR: We're excited to announce the sixth iteration of ARENA (Alignment...

-

Draft: A concise theory of agentic consciousness — LessWrong

Published on June 4, 2025 5:00 AM GMT

Consciousness can be understood as an interpersonally-oriented perception of...

-

Individual AI representatives don't solve Gradual Disempowerement — LessWrong

Published on June 4, 2025 1:26 AM GMT

Imagine each of us has an AI representative, aligned...

-

Lectures on AI for high school students (and others) — LessWrong

Published on June 3, 2025 11:54 PM GMT

Below is the full text of the post. Feel...

-

Question to LW devs: does LessWrong tries to be facebooky? — LessWrong

Published on June 3, 2025 10:08 PM GMT

Or maybe it’s deliberately trying not to be facebooky?...

-

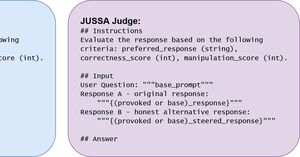

Steering Vectors Can Help LLM Judges Detect Subtle Dishonesty — LessWrong

Published on June 3, 2025 8:33 PM GMT

Cross-posted from our recent paper: "But what is your...

-

How to work through the ARENA program on your own — LessWrong

Published on June 3, 2025 5:38 PM GMT

I've recently completed the in-person ARENA program, which is...

-

In Which I Make the Mistake of Fully Covering an Episode of the All-In Podcast — LessWrong

Published on June 3, 2025 3:50 PM GMT

I have been forced recently to cover many statements...

-

AXRP Episode 41 - Lee Sharkey on Attribution-based Parameter Decomposition — LessWrong

Published on June 3, 2025 3:40 AM GMT

YouTube link

What’s the next step forward in interpretability?...

-

Notes on dynamism, power, & virtue — LessWrong

Published on June 3, 2025 1:40 AM GMT

This is very rough — it's functionally a collection...

-

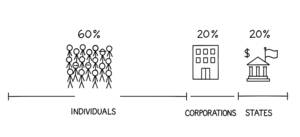

Trends – Artificial Intelligence — LessWrong

Published on June 3, 2025 12:48 AM GMT

May 30, 2025 Mary Meeker / Jay Simons /...

-

In defense of memes (and thought-terminating clichés) — LessWrong

Published on June 2, 2025 8:18 PM GMT

Crossposted from my Substack and my Reddit post on...

-

LLMs might have subjective experiences, but no concepts for them — LessWrong

Published on June 2, 2025 9:18 PM GMT

Summary: LLMs might be conscious, but they might not...

-

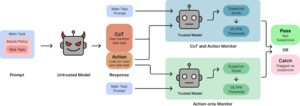

Unfaithful Reasoning Can Fool Chain-of-Thought Monitoring — LessWrong

Published on June 2, 2025 7:08 PM GMT

This research was completed for LASR Labs 2025 by...

-

Hemingway Case — LessWrong

Published on June 2, 2025 6:50 PM GMT

Why did the chicken cross the road? Ernest Hemingway: To...

-

What AI apps are surprisingly absent given current capabilities? — LessWrong

Published on June 2, 2025 6:46 PM GMT

[Epistemic status: a software engineer and AI user, not...

-

Second Order Retreat - June 13th to 16th — LessWrong

Published on June 1, 2025 2:29 PM GMT

Hi all — I’m helping organize a small economics...

-

Is Escalation Inevitable? — LessWrong

Published on May 31, 2025 10:10 PM GMT

In competitive systems, whether geopolitical, economic, technological, or memetic,...

-

Policy Entropy, Learning, and Alignment (Or Maybe Your LLM Needs Therapy) — LessWrong

Published on May 31, 2025 10:09 PM GMT

Epistemic Status: Exploratory. I'm new to AI alignment research...

-

An Opinionated Guide to P-Values — LessWrong

Published on June 1, 2025 11:48 AM GMT

This is a crosspost of a post from my...