Second Order Retreat - June 13th to 16th — LessWrong

Published on June 1, 2025 2:29 PM GMT

Hi all — I’m helping organize a small economics...

Is Escalation Inevitable? — LessWrong

Published on May 31, 2025 10:10 PM GMT

In competitive systems, whether geopolitical, economic, technological, or memetic,...

Policy Entropy, Learning, and Alignment (Or Maybe Your LLM Needs Therapy) — LessWrong

Published on May 31, 2025 10:09 PM GMT

Epistemic Status: Exploratory. I'm new to AI alignment research...

An Opinionated Guide to P-Values — LessWrong

Published on June 1, 2025 11:48 AM GMT

This is a crosspost of a post from my...

Legal Personhood for Models: Novelli et al. & Mocanu — LessWrong

Published on June 1, 2025 8:18 AM GMT

In a previous article I detailed FSU Law professor...

The Unseen Hand: AI's Problem Preemption and the True Future of Labor — LessWrong

Published on May 31, 2025 10:04 PM GMT

I study Economics and Data Science at the University...

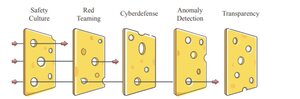

The 80/20 playbook for mitigating AI scheming in 2025 — LessWrong

Published on May 31, 2025 9:17 PM GMT

Adapted from this twitter thread. See this as a...

The best approaches for mitigating "the intelligence curse" (or gradual disempowerment); my quick guesses at the best object-level interventions — LessWrong

Published on May 31, 2025 6:20 PM GMT

There have recently been various proposals for mitigations to "the intelligence curse"...

How Epistemic Collapse Looks from Inside — LessWrong

Published on May 31, 2025 4:30 PM GMT

There’s a story — I'm at a conference and...

When will AI automate all mental work, and how fast? — LessWrong

Published on May 31, 2025 4:18 PM GMT

Rational Animations takes a look at Tom Davidson's Takeoff...

50 Ideas for Life I Repeatedly Share — LessWrong

Published on May 30, 2025 4:57 PM GMT

These are the most significant pieces of life advice/wisdom...

Virtues related to honesty — LessWrong

Published on May 30, 2025 2:11 PM GMT

Status: musings. I wanted to write up a more...

AI 2027 - Rogue Replication Timeline — LessWrong

Published on May 30, 2025 1:46 PM GMT

I envision a future more chaotic than portrayed in...

Letting Kids Be Kids — LessWrong

Published on May 30, 2025 10:50 AM GMT

Letting kids be kids seems more and more important...

The Geometry of LLM Logits (an analytical outer bound) — LessWrong

Published on May 30, 2025 1:21 AM GMT

The Geometry of LLM Logits (an analytical outer bound)...

Experimental CFAR Mini-Workshop @ Arbor Summer Camp — LessWrong

Published on May 30, 2025 12:23 AM GMT

From June 2-6, the Center for Applied Rationality will...

CFAR is running an experimental mini-workshop (June 2-6, Berkeley CA)! — LessWrong

Published on May 29, 2025 10:02 PM GMT

Hello from the Center for Applied Rationality! Some of you...

Orphaned Policies (Post 5 of 6 on AI Governance) — LessWrong

Published on May 29, 2025 9:42 PM GMT

In previous posts in this sequence, I laid out...

Gradual Disempowerment: Concrete Research Projects — LessWrong

Published on May 29, 2025 6:55 PM GMT

This post benefitted greatly from comments, suggestions, and ongoing...

Do you even have a system prompt? (PSA / repo) — LessWrong

Published on May 29, 2025 6:49 PM GMT

Everyone around me has a notable lack of system...

Fun With Veo 3 and Media Generation — LessWrong

Published on May 28, 2025 6:30 PM GMT

Since Claude 4 Opus things have been refreshingly quiet....

What LLMs lack — LessWrong

Published on May 28, 2025 4:19 PM GMT

Introduction

I have long been very interested in the limitations...

Playlist Inspired by Manifest 2024 — LessWrong

Published on May 28, 2025 4:03 PM GMT

Okay, I think it's time to stop polishing this...

AISN #56: Google Releases Veo 3 — LessWrong

Published on May 28, 2025 4:00 PM GMT

Welcome to the AI Safety Newsletter by the Center...

How Self-Aware Are LLMs? — LessWrong

Published on May 28, 2025 12:57 PM GMT

An interim research report

Summary

We introduce a novel methodology for...

Can We Hack Hedonic Treadmills? — LessWrong

Published on May 28, 2025 11:42 AM GMT

During a visit to a Hong Kong children’s welfare...

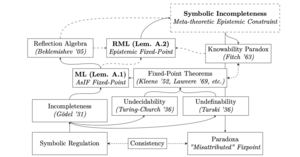

Provability Inclusion as a Short Analogy — LessWrong

Published on May 28, 2025 10:50 AM GMT

The following analogy is intended to illustrate a novel...

AI’s goals may not match ours — LessWrong

Published on May 28, 2025 9:30 AM GMT

Context: This is a linkpost for https://aisafety.info/questions/NM3I/6:-AI%E2%80%99s-goals-may-not-match-ours

This is an...

AI may pursue goals — LessWrong

Published on May 28, 2025 9:30 AM GMT

Context: This is a linkpost for https://aisafety.info/questions/NM3J/5:-AI-may-pursue-goals

This is an...

The Best Way to Align an LLM: Inner Alignment is Now a Solved Problem? — LessWrong

Published on May 28, 2025 6:21 AM GMT

This is a link-post for a new paper I...

Poetic Methods II: Rhyme as a Focusing Device — LessWrong

Published on May 26, 2025 6:29 PM GMT

As promised in the previous instalment on meter, let’s...

Is Building Good Note-Taking Software an AGI-Complete Problem? — LessWrong

Published on May 26, 2025 6:26 PM GMT

In my experience, the most annoyingly unpleasant part of...

Does the Universal Geometry of Embeddings paper have big implications for interpretability? — LessWrong

Published on May 26, 2025 6:20 PM GMT

Rishi Jha, Collin Zhang, Vitaly Shmatikov and John X....

Socratic Persuasion: Giving Opinionated Yet Truth-Seeking Advice — LessWrong

Published on May 26, 2025 5:38 PM GMT

The full post is long, but you can 80/20...

An observation on self-play — LessWrong

Published on May 26, 2025 5:22 PM GMT

At NeurIPS 2024, Ilya Sutskever delivered a short keynote...

[Beneath Psychology] Case study on chronic pain: First insights, and the remaining challenge — LessWrong

Published on May 26, 2025 5:29 PM GMT

In the last post I took the seemingly-naive stance...

Asking for AI Safety Career Advice — LessWrong

Published on May 26, 2025 3:26 PM GMT

Hi! I'm a rising junior in undergrad, working on...

New website analyzing AI companies' model evals — LessWrong

Published on May 26, 2025 4:00 PM GMT

I'm making a website on AI companies' model evals...

New scorecard evaluating AI companies on safety — LessWrong

Published on May 26, 2025 4:00 PM GMT

The new scorecard is on my website, AI Lab Watch....

Nerve Blisters: A Stoic Response — LessWrong

Published on May 26, 2025 3:07 PM GMT

The chickenpox virus waited for decades, attacking the moment...

Consider buying voting shares — LessWrong

Published on May 25, 2025 6:01 PM GMT

One of the best and easiest ways to influence...

Can you donate to AI advocacy? — LessWrong

Published on May 25, 2025 5:54 PM GMT

I posted a quick take that advocacy may be...

Rant: the extreme wastefulness of high rent prices — LessWrong

Published on May 25, 2025 5:04 PM GMT

09:46: Everyone wants to be close to everyone else...

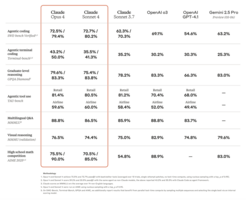

Claude 4 You: Safety and Alignment — LessWrong

Published on May 25, 2025 2:00 PM GMT

Unlike everyone else, Anthropic actually Does (Some of) the...

Alignment Proposal: Adversarially Robust Augmentation and Distillation — LessWrong

Published on May 25, 2025 12:58 PM GMT

Epistemic Status: Over years of reading alignment plans and...

Meditations on Doge — LessWrong

Published on May 25, 2025 12:00 PM GMT

Lessons from shutting down institutions in Eastern Europe.

This is...

Lie Detectors. Technical solutions to the cooperation problem. — LessWrong

Published on May 24, 2025 8:05 PM GMT

The purpose of this post is to argue for...

Case Studies in Simulators and Agents — LessWrong

Published on May 25, 2025 5:40 AM GMT

Simulators was posted two and a half years ago...

On safety of being a moral patient of ASI — LessWrong

Published on May 24, 2025 9:24 PM GMT

I have noticed that there are talks around about...

We Need a Baseline for LLM-Aided Experiments — LessWrong

Published on May 24, 2025 8:52 PM GMT

There has recently been a back-and-forth over Claude 4...