~www_lesswrong_com | Bookmarks (706)

-

The Silent War: AGI-on-AGI Warfare and What It Means For Us — LessWrong

Published on March 15, 2025 3:24 PM GMT. By A. Nobody. Introduction: The emergence of Artificial General Intelligence (AGI)...

-

Why Billionaires Will Not Survive an AGI Extinction Event — LessWrong

Published on March 15, 2025 6:08 AM GMT. By A. Nobody. Introduction: Throughout history, the ultra-wealthy have insulated themselves...

-

Paper: Field-building and the epistemic culture of AI safety — LessWrong

Published on March 15, 2025 12:30 PM GMT. Abstract: The emerging field of “AI safety” has attracted public...

-

AI4Science: The Hidden Power of Neural Networks in Scientific Discovery — LessWrong

Published on March 14, 2025 9:18 PM GMT. AI4Science has the potential to surpass current frontier models (text,...

-

The Dangers of Outsourcing Thinking: Losing Our Critical Thinking to the Over-Reliance on AI Decision-Making — LessWrong

Published on March 14, 2025 11:07 PM GMT. I’ve become so reliant on a GPS that using...

-

Report & retrospective on the Dovetail fellowship — LessWrong

Published on March 14, 2025 11:20 PM GMT. In September last year I posted an ad for...

-

LLMs may enable direct democracy at scale — LessWrong

Published on March 14, 2025 10:51 PM GMT. American democracy currently operates far below its theoretical ideal....

-

2024 Unofficial LessWrong Survey Results — LessWrong

Published on March 14, 2025 10:29 PM GMT. Thanks to everyone who took the Unofficial 2024 LessWrong...

-

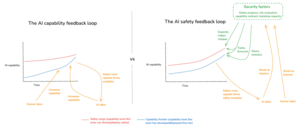

AI Tools for Existential Security — LessWrong

Published on March 14, 2025 6:38 PM GMT. Rapid AI progress is the greatest driver of existential...

-

AI for AI safety — LessWrong

Published on March 14, 2025 3:00 PM GMT. (Audio version here (read by the author), or search...

-

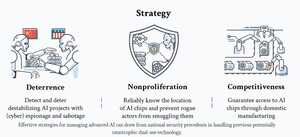

On MAIM and Superintelligence Strategy — LessWrong

Published on March 14, 2025 12:30 PM GMT. Dan Hendrycks, Eric Schmidt and Alexandr Wang released an...

-

Whether governments will control AGI is important and neglected — LessWrong

Published on March 14, 2025 9:48 AM GMT. Epistemic status: somewhat rushed out in advance of the...

-

Something to fight for — LessWrong

Published on March 14, 2025 8:27 AM GMT. A short science fiction story illustrating that if we...

-

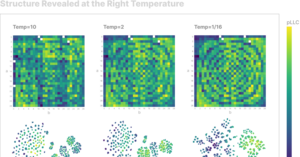

Interpreting Complexity — LessWrong

Published on March 14, 2025 4:52 AM GMT. This is a cross-post - as some plots are...

-

Bike Lights are Cheap Enough to Give Away — LessWrong

Published on March 14, 2025 2:10 AM GMT. While in a more remote area bike lights...

-

Should AI safety be a mass movement? — LessWrong

Published on March 13, 2025 8:36 PM GMT. When communicating about existential risks from AI misalignment, is...

-

Auditing language models for hidden objectives — LessWrong

Published on March 13, 2025 7:18 PM GMT. We study alignment audits—systematic investigations into whether an AI...

-

Vacuum Decay: Expert Survey Results — LessWrong

Published on March 13, 2025 6:31 PM GMT

-

A Frontier AI Risk Management Framework: Bridging the Gap Between Current AI Practices and Established Risk Management — LessWrong

Published on March 13, 2025 6:29 PM GMT. We (SaferAI) propose a risk management framework which we...

-

Creating Complex Goals: A Model to Create Autonomous Agents — LessWrong

Published on March 13, 2025 6:17 PM GMT. Why do adults pursue long-term and complex goals? People...

-

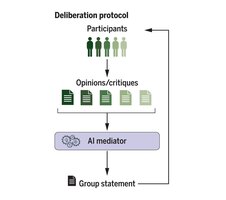

Habermas Machine — LessWrong

Published on March 13, 2025 6:16 PM GMT. This post is a distillation of a recent work...

-

The "Reversal Curse": you still aren't anthropomorphising enough. — LessWrong

Published on March 13, 2025 10:24 AM GMT. I scrutinise the so-called "reversal curse", wherein LLMs seem...

-

AI #107: The Misplaced Hype Machine — LessWrong

Published on March 13, 2025 2:40 PM GMT. The most hyped event of the week, by far,...

-

Intelsat as a Model for International AGI Governance — LessWrong

Published on March 13, 2025 12:58 PM GMT. If there is an international project to build artificial...