~www_lesswrong_com | Bookmarks (706)

-

If you wanted to actually reduce the trade deficit, how would you do it? — LessWrong

Published on January 26, 2025 6:04 PM GMT. What do we want? The US trade deficit is a...

-

Anatomy of a Dance Class: A step by step guide — LessWrong

Published on January 26, 2025 6:02 PM GMT. A year ago, I started dancing and my dance...

-

Disproving the "People-Pleasing" Hypothesis for AI Self-Reports of Experience — LessWrong

Published on January 26, 2025 3:53 PM GMT. I thought it might be helpful to present some...

-

Why care about AI personhood? — LessWrong

Published on January 26, 2025 11:24 AM GMT. In this new paper, I discuss what it would...

-

Kessler's Second Syndrome — LessWrong

Published on January 26, 2025 7:04 AM GMT. It started as so many dooms do, with a...

-

Brainrot — LessWrong

Published on January 26, 2025 5:35 AM GMT. January: In early 2026, Meta launches a fleet of...

-

Notes on Argentina — LessWrong

Published on January 26, 2025 3:51 AM GMT. Fitz Roy Massif, El Chalten, Argentina. I recently got back...

-

Recommendations for Recent Posts/Sequences on Instrumental Rationality? — LessWrong

Published on January 26, 2025 12:41 AM GMT. I absolutely love the Science of Winning at Life...

-

Anomalous Tokens in DeepSeek-V3 and r1 — LessWrong

Published on January 25, 2025 10:55 PM GMT. “Anomalous”, “glitch”, or “unspeakable” tokens in an LLM are...

-

The Rising Sea — LessWrong

Published on January 25, 2025 8:48 PM GMT. And then we hit a wall. Nobody expected it. Well......

-

Liron Shapira vs Ken Stanley on Doom Debates. A review — LessWrong

Published on January 24, 2025 6:01 PM GMT. I summarize my learnings and thoughts on Liron Shapira's...

-

Is there such a thing as an impossible protein? — LessWrong

Published on January 24, 2025 5:12 PM GMT. This is something I’ve been thinking about since my...

-

Stargate AI-1 — LessWrong

Published on January 24, 2025 3:20 PM GMT. There was a comedy routine a few years ago....

-

QFT and neural nets: the basic idea — LessWrong

Published on January 24, 2025 1:54 PM GMT. Previously in the series: The laws of large numbers...

-

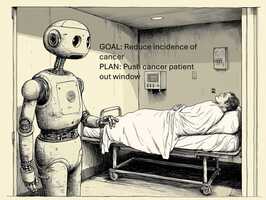

Eliciting bad contexts — LessWrong

Published on January 24, 2025 10:39 AM GMT. Say an LLM agent behaves innocuously in some context...

-

Insights from "The Manga Guide to Physiology" — LessWrong

Published on January 24, 2025 5:18 AM GMT. Physiology seemed like a grab-bag of random processes which...

-

Do you consider perfect surveillance inevitable? — LessWrong

Published on January 24, 2025 4:57 AM GMT. A lot of my recent research work focusses on: 1....

-

Uncontrollable: A Surprisingly Good Introduction to AI Risk — LessWrong

Published on January 24, 2025 4:30 AM GMT. I recently read Darren McKee's book "Uncontrollable: The Threat...

-

Contra Dances Getting Shorter and Earlier — LessWrong

Published on January 23, 2025 11:30 PM GMT. I think of a standard contra dance as...

-

Starting Thoughts on RLHF — LessWrong

Published on January 23, 2025 10:16 PM GMT. Cross-posted from Substack. Continuing the Stanford CS120 Introduction to...

-

Recursive Self-Modeling as a Plausible Mechanism for Real-time Introspection in Current Language Models — LessWrong

Published on January 22, 2025 6:36 PM GMT (and as a completely speculative hypothesis for the minimum...

-

Ut, an alternative gender-neutral pronoun — LessWrong

Published on January 22, 2025 5:36 PM GMT. This post is about ‘ut’, a gender-neutral pronoun I...

-

Mechanisms too simple for humans to design — LessWrong

Published on January 22, 2025 4:54 PM GMT. Cross-posted from Telescopic Turnip. As we all know, humans are...

-

Training Data Attribution: Examining Its Adoption & Use Cases — LessWrong

Published on January 22, 2025 3:41 PM GMT. Note: This report was conducted in June 2024 and...